FREQUENTLY ASKED SNOWFLAKE CLOUD DEVELOPER INTERVIEW QUESTIONS

In continuation of Snowflake Developer Interview Questions and Answers (Part 1).

Topics Covered are Snowflake NVL2 and NVL function, Warehouse, STREAM, External table, types of tables in snowflake, Time travel, Fail-Safe, and materialized view.

Let's jump right away into the question.

Q1: What is Snowflake URL?

Q2: Explain the difference between NVL2 and NVL function?

- If expr1 is not null, it returns expr2

- If expr1 is null, it returns expr3

- If expr1 is not null, it returns expr1 itself

- If expr1 is null, it returns expr2

|

| NVL2 , NVL query |

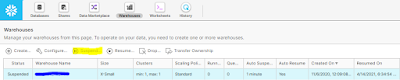

Q3: Is it possible to suspend a warehouse manually?

|

| Snowflake |

WITH warehouse_size=’MEDIUM’;

ALTER WAREHOUSE [ IF EXISTS ] name SUSPEND|RESUME;

Q4: Difference between DBMS and RDBMS?

DBMS :

- Abbreviation - Database Management System

- Stored in hierarchical form

- Abbreviation - Relational Database Management System

- Stored in tabular form

Q5: What happens you suspend a warehouse?

When you suspend a warehouse, snowflake suspends all idle servers for the warehouse, but allows any server that are executing statements to continue until the statements complete, at which time the servers are shut down and the changes the to suspend mode.

Q6: What is stream?

The stream will create three extra columns (METADATA$ACTION, METADATA$ISUPDATE, and METADATA$ROW_ID).

Based on it is decided whether the incoming record is new, deleted, or updated.

Metadata$Action | Metadata$IsUpdate | Scenario |

INSERT | FALSE | Insert - New record |

DELETE | FALSE | Delete - Deleted record |

INSERT | TRUE | Update - Updated record |

DELETE | TRUE |

Q7: What is an external table?

WITH

LOCATION = @stage_name

AUTO_REFRESH

= true

FILE_FORMAT

= file_format_name;

Q8: What are the types of tables in Snowflake?

Temporary Table – Stores non-permanent, transitory data.Only exists within the session, will not be visible to other users.

Syntax : Create

temporary table mytemptable (id number, creation_date date);

Permanent Table – Available until explicitly

dropped.

Has fail safe period.

Transient Table - Available until explicitly dropped. Doesn’t have fail safe period, can prefer when the data doesn’t require same level of data protection and recovery as permanent table.

Syntax : Create

transient table mytranstable (id number, creation_date date);

Q9: Difference between Time travel and Failsafe?

Time Travel

- Access historical data that has been changed or deleted at any point within defined time period. (SELECT, CREATE … CLONE, UNDROP)

- Retention period – 1 to 90 days (default 1 day)

- If retention period for time travel ends, it will move to fail safe.

Fail safe

- Historical data is protected in case of system failure

- Data is recoverable only by snowflake.

- Retention period – 7 days for Permanent table, 0 days for transient table

Q10: Difference between materialized view and normal view?

|

Materialized View |

View |

|

Data is stored on disk |

Holds the data virtually |

|

Updated periodically based on query definition |

Refresh only when accessed |

|

WHEN TO USE – Resultant doesn’t change often |

WHEN TO USE - Resultant changes often |

|

View is used often |

View that is not used often |