FREQUENTLY ASKED INFORMATICA INTERVIEW QUESTIONS

In this blog, we are going to explore some of the most frequently asked Informatica interview questions. Hoping that it will be helpful and wishing the best for your interview.

Brief: Informatica is one of the most widely used ETL data integration tool for loading data and export functions.

It has its own transformation language that you can use in your expressions, filters, and such.

Let's move on to the questions.

Q1: What is database, datawarehouse, and datamart?

The database stores different kinds of data, however, it is smaller than the data warehouse.

Datawarehouse is used to store historical data.

And datamart is a subset of data warehouse, which is used for analytical purposes like prediction.

Q2: What are the types of dimension modeling? Or What are the different types of schema?

Dimension modeling or schema is of two types.

1) Star Schema

2) Snowflake Schema

Detailed Explanation:

Star Schema:

- Dimensional tables are not normalized

- Dimensional tables are not linked to each other

|

| Star Schema |

Snowflake Schema:- Dimension tables are normalized

- Dimension tables are divided into sub-dimension

|

| Snowflake Schema |

Q3: What are the different types of Slowly Changing Dimension (SCD)?

Type 0 - Fixed dimension. No changes allowed i.e. data never changes.

Type 1 - No history. Updates the record directly, only current data is stored and no historical data.

Type 2 - Maintains history by storing the value as a new record.

It is of three types.

1) Flagging (Y/N)

2) Versioning ( Sequenced by numbers)

3) Effective date ( Start date and End date)

Type 3 - Previous value column. Maintains

history as the current and previous version column-wise.

Q4: What are the differences between connected lookup and unconnected lookup?

|

Connected Lookup

|

Unconnected Lookup

|

|

The input value is received directly from

pipeline

|

The input value is received from the result of

:lkpexp

|

|

Dynamic or static cache can be used

|

Only static cache can be used

|

|

Returns multiple columns

|

Returns only one column

|

|

If no match returns, a default value will be

returned

|

Returns NULL

|

|

User-defined default value

|

Doesn’t support the user-defined default value

|

Q5: How to optimize a lookup transformation?

1) If the lookup table database is the same as the source, then perform the join in the database itself.

2) Apply filter to minimize the loop-up rows.

3) Optimize the lookup condition by commenting default group-by clause.

Q6: What are the different types of keys?

Primary key - Uniquely identifies each record. Doesn't allow NULL value.

Foreign key - Primary key from one table, which has a relationship with another table.

Surrogate key - Is the natural prime key, which is generated but not based on the database data.

Unique key - Uniquely identifies each value. Allows NULL value.

Q7: What are the files created by information during session runs?

1) Error log

2) Session log

3) Workflow log

4) Bad file/ Rejection file

Q7: What is pushdown optimization and its limitation?

Analyzes the logic, mapping, and session configuration

to determine the logic to push to the database.

Types of pushdown optimization,

None – Does not push any logic

Source – Pushes the logic as much as possible to source

Target - Pushes the logic as much as possible to target

Full - Pushes the logic as much as possible to source and target

The limitation of pushdown optimization is that RANK transformation cannot be pushed.

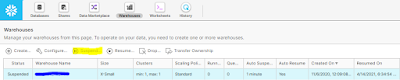

Q7: Based on what factors session logs can be retained in Informatica?

The session logs can be retained based

1) Number of runs

2) Timestamps

|

| Session properties |

It can be configured in session property under the config obj tab as shown in the above image.

Q8: What is PMCMD?

The PMCMD is an in-build line program that communicates with the integration service to start, stop, schedule or monitor a workflow.

Q9: What is a router transformation and what are groups available in the router transformation?

Router transformation is an active and connected transformation that is similar to filter transformation.

But the router gives multiple output groups and each output group can hold different filters.

Unlike filter transformation, all the records that don't satisfy any of the condition is pushed to the default group.

|

| Router property |

Types of groups in the router,

1) Input group

2) Output group

3) User-defined group

4) Default group

Q10: Difference between filter and router transformation?

|

Filter

|

Router

|

|

Only one condition can be applied

|

Multiple conditions can be applied

|

|

Can use only one target

|

Can use multiple target

|

|

Returns multipe columns

|

Returns only one column

|

|

Rejected rows cannot be captured

|

Rejected rows can be captured by the

default group

|

Read More :